DevOps and the Software Development Life Cycle

Software development is a constantly evolving discipline that has transformed the way we interact with technology in our daily lives. From mobile applications to complex enterprise systems, the software development process plays a fundamental role in creating and improving the technology solutions that surround us.

In this article, we will explore the software development life cycle, a structured and carefully planned process that spans from the conception of an idea to the delivery of the final product and, eventually, the replacement or retirement of the system that hosts it. We will see how it addresses each crucial stage, from requirements assessment to deployment and maintenance, so that projects are developed efficiently and with the highest quality.

Moreover, we will describe the fundamental role that the DevOps methodology plays in this process: fostering a culture of collaboration and close communication between development and operations teams, automating processes and adopting a continuous improvement mindset.

Finally, special focus will be placed on the possibility of covering several stages of the code lifecycle with a single solution. This is an ambitious but highly beneficial goal that is gaining ground in the industry, because it allows vendors to reach a larger market and provides centralization, which brings numerous benefits to the end user.

What is the software development life cycle and why is it important?

The software development life cycle (SDLC) is a representation of the complete process that a software project follows, from its conception to its completion and subsequent maintenance.

Although there are different software development life cycle models, the ISO/IEC/IEEE 12207:2017 standard establishes:

“A common framework for software life cycle processes, with well-defined terminology, to which the software industry can refer. It contains processes, activities and tasks applicable during the acquisition, supply, development, operation, maintenance or disposal of software systems, products and services. These life cycle processes are carried out through stakeholder involvement, with the ultimate goal of achieving customer satisfaction.”

Its importance stems from the boom the sector is experiencing, driven by the digitalization of the world we live in: demand for professionals keeps growing, and with it the need for optimal, standardized processes.

What are its stages?

Having explained what the life cycle is and why it matters when developing software, we will now describe the stages into which it is divided and the objective of each one.

Planning

This initial stage focuses on identifying the scope of the project and its requirements for further analysis. The goal of this stage is to understand the purpose of the project and the client’s needs and desired outcomes. SMART objectives will be determined: Specific, Measurable, Achievable, Realistic and Time-bound.

Design

Once the information described in the previous stage has been analyzed, the steps to be taken to achieve that objective – the “how” of completing a project – will be determined. An abstract model of the system to be built will be elaborated, based on the requirements raised in the previous phase. This model provides details about the software to be developed, including system architectures, data structures, user interface design, and other technical aspects necessary to implement the system. An allocation of costs, timelines and milestones, and required materials and documentation will be established. This stage also involves calculating and forecasting risks, setting up change processes and defining protocols.

Implementation

In this phase of the life cycle, the software product is implemented; in other words, the source code is written in the programming language chosen to suit the project.

Testing

Once development and implementation are finished, the testing phase begins, in which the implemented design is verified. This stage looks for errors that may have been introduced in the previous stages: it is a phase for correcting, eliminating and improving on failures that were not foreseen in the earlier steps. Common processes include unit tests, integration tests, system tests and acceptance tests.
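As an illustration of the first of these levels, a unit test checks a single function in isolation. The sketch below uses Python's standard `unittest` module; the function `apply_discount` and its behaviour are hypothetical, chosen only to show the pattern.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test exercises apply_discount on its own, with no other components involved."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_no_discount(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


if __name__ == "__main__":
    # Load and run this test case explicitly, without exiting the interpreter.
    suite = unittest.TestLoader().loadTestsFromTestCase(ApplyDiscountTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

Integration, system and acceptance tests follow the same idea at progressively larger scopes, combining several components or the whole application.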

Deployment

Once the software has passed the tests satisfactorily, it is deployed to the desired environment, whether pre-production or production. The choice depends on each client’s particular circumstances, and each case will have its own associated workflow.

Maintenance

In this period the software is already in operation. Over time, some functions may become obsolete, some limitations may be detected or proposals may appear to improve the stability of the project, so a maintenance phase is necessary. This involves bug fixes, periodic software updates, or implementation and deployment of improvements.

What is the importance of the DevOps methodology?

The DevOps methodology is a software development approach that seeks to integrate collaboration and communication between the development (Dev) and operations (Ops) teams to achieve faster, more efficient and reliable software delivery.

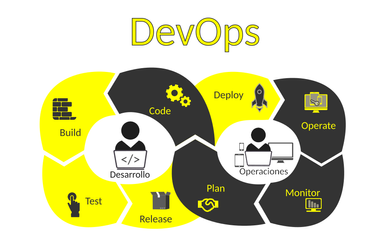

As the image above shows, the stages of the DevOps cycle are very similar to those of the software life cycle. In other words, the DevOps methodology embraces the software life cycle and adds a change of mindset that greatly improves efficiency and communication between the development and operations teams. As it is colloquially put, DevOps is the oil that keeps the gears turning smoothly.

These improvements, and how they are achieved, are detailed below:

- Collaboration: cooperation between the different areas of the organization is promoted at all times, and the entire team is involved in every stage.

- Automation: the aim is to remove manual intervention from all repetitive processes. This substantially increases the reliability of critical processes, such as deploying software to a production environment, and reduces friction between the different areas, which is vital for improving working relationships.

- Continuous Integration (CI): aims to automate and facilitate the frequent integration of source code changes into a shared repository. In essence, it ensures that new or modified code is merged into the existing code regularly, so that errors (in both code quality and security) and potential conflicts can be detected and resolved quickly.

- Continuous Delivery (CD): extends continuous integration by automating the deployment of applications to the desired environment after successful testing. If the tests fail, the delivery process is blocked until the results meet the established requirements. This is fundamental for maintaining thorough control over the source code deployed to production environments, a task that becomes harder as teams and infrastructures grow more complex.

- Scalability and flexibility: DevOps methodology adapts well to agile development environments and distributed systems, allowing adaptability according to needs and automated deployment of applications in complex environments.

- Monitoring: DevOps promotes observability and continuous review of the performance of applications and the servers that host them. The objective is to collect as much information as possible from the system in order to anticipate problems before they occur. When an error cannot be anticipated, the aim is to resolve it as quickly as possible through automated processes that considerably reduce the time needed to deploy the fix.
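The gating behaviour described for CI and CD can be sketched as an ordered list of stages in which any failure stops everything downstream. This is a simplified illustration in Python, not the configuration format of any particular CI/CD engine; the `Stage` and `run_pipeline` helpers and the stage names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One step of a hypothetical pipeline; `run` returns True on success."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Because execution stops, no later stage (such as a production
    deployment) runs once a test or check has failed.
    """
    completed: List[str] = []
    for stage in stages:
        if not stage.run():
            print(f"Stage '{stage.name}' failed: pipeline blocked")
            break
        completed.append(stage.name)
    return completed


# Example: the security scan fails, so the deployment stage never runs.
pipeline = [
    Stage("build", lambda: True),
    Stage("unit-tests", lambda: True),
    Stage("security-scan", lambda: False),  # simulated failing check
    Stage("deploy-production", lambda: True),
]
print(run_pipeline(pipeline))  # ['build', 'unit-tests']
```

Real engines add parallel jobs, retries and manual approvals, but the core rule is the same: deployment only happens when every preceding check has passed.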

A new challenge appears on the horizon

The software development life cycle is a “big pie” that used to be clearly divided by stage, with a different group of companies in each stage solving the problems specific to it.

As we have seen above, the stages are related to one another, yet each is different enough from the rest that the complexity involved has traditionally made it very difficult for any one of these large companies to cover more than one.

However, companies today are pursuing exactly this goal. Two clear examples, among others, are GitHub and GitLab, which were born as Git-based version control tools. They therefore initially belonged to the implementation stage, but rather than being pigeonholed, they have chosen to expand and cover later stages. GitLab has gone a step further and also includes the planning stage, which is largely dominated by ticketing tools such as Jira, or Confluence for documentation.

With this change of mentality, in addition to increasing revenues by being able to reach a larger target audience, there are a number of advantages to be taken into account:

- Homogenization: the diversity of applications within the architecture is eliminated, giving the end user a better experience and making their day-to-day work much easier, with a single solution under a single domain. This removes the need for LDAP-based solutions to manage users centrally across several different applications.

- Elimination of integration processes: by encompassing a large number of processes in a single application, the effort associated with the configuration and subsequent maintenance of integrations between different applications is eliminated.

- Reduction of the cost associated with the maintenance of the different applications: by having only one solution, it is no longer necessary to maintain a set of applications, each with its own peculiarities and corresponding maintenance.

- Reduction of failure points and improvement of performance: keeping everything under the same solution reduces the weak points where errors can appear and eliminates the delays caused by communication between applications, thus improving the performance of the process.

- Greatly improved traceability: by centralizing several processes under the same solution, the entire process can be tracked in a more intuitive and simple way. It becomes possible to draw a clear line from a ticket created during a Scrum session to the deployment of the corresponding code in the production environment.

- Big data: the ability to extract information about the processes increases. This enables ‘value stream’ charts and DORA metrics, from which users can identify points for improvement and achieve a much more efficient process.

- Reduction of technical debt: technical debt has commonly been associated with code development, but it is very important to relate it also to the architecture used and its possible failures. In that light, a single solution avoids redundant functions, duplicated expenses and operational overload. It also facilitates governance of the application, which has a positive impact on the developer experience.
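To illustrate the kind of information a centralized platform makes available, the four DORA metrics (deployment frequency, lead time for changes, change failure rate and time to restore service) can be derived from a simple deployment history. This is a minimal sketch under stated assumptions: the `Deployment` record and its fields are hypothetical, and time to restore is approximated as the time from the failed deployment to recovery.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a failure in production?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def dora_metrics(deploys: List[Deployment], period_days: int) -> dict:
    """Compute the four DORA metrics over a history covering `period_days` days."""
    failures = [d for d in deploys if d.failed]
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    # Simplification: restore time is measured from the failed deployment to recovery.
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Example: three deployments in one day, one of which failed and was fixed an hour later.
t0 = datetime(2024, 1, 1, 9, 0)
history = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0, t0 + timedelta(hours=4), failed=True, restored_at=t0 + timedelta(hours=5)),
    Deployment(t0, t0 + timedelta(hours=6)),
]
print(dora_metrics(history, period_days=1))
```

In a fragmented toolchain, the commit, deployment and incident timestamps would typically live in different tools, which is why centralizing them simplifies this kind of calculation.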

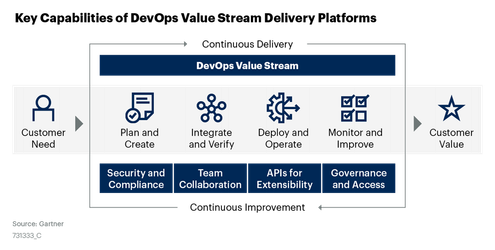

As a result of this evolution, the DevOps Platform concept has emerged. It is defined as a set of tools, practices and services that enable development and operations teams to collaborate effectively and automate the development lifecycle, providing a unified environment in which teams can work together, automate key processes and improve efficiency in software development and operation.

By contrast, a fragmented DevOps toolchain requires integration between its various tools so that they work in an orchestrated manner, as well as maintenance (including patching and upgrading to the latest versions) and constant configuration tuning.

These tasks take time away from productive day-to-day work, reducing the value delivered to the end customer. DevOps Platform providers aim to accelerate the delivery of value to the customer by offering a fully integrated, managed set of capabilities with native support for orchestration.

The real cost of DevOps

We are going to perform an estimation exercise that allows us to compare the cost of a DevOps toolchain with the cost of a DevOps Platform.

We assume a company with 100 developers and an annual development budget of $140,000. The company has an efficient DevOps model that allows it to make between 5 and 15 deliveries per day and that supports different technologies and programming languages.

The approximate cost of a DevOps platform

Based on a generic calculation across the DevOps Platform options currently on the market, the average subscription costs $25/user/month, which for a team of 100 people amounts to an annual cost of $30,000.
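The annual figure follows directly from the average subscription price, the team size and the twelve months of the year:

```latex
\text{Annual cost} = \$25\ \tfrac{1}{\text{user}\cdot\text{month}} \times 100\ \text{users} \times 12\ \text{months} = \$30{,}000
```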

In SaaS mode, maintenance of the DevOps platform is borne by the vendor, so the user does not incur this cost. Note that this changes if the consuming company chooses a self-managed solution, in which case it becomes responsible for maintenance and the costs increase.

The approximate cost of a toolchain

Since each company may use a different combination of open-source and enterprise tools to cover the entire code development lifecycle, we have chosen the most commonly found option, consisting of:

- Ticketing tool, in which we have an inventory of tasks, which clearly describe the different actions to be performed and the status in which they are.

- Code version control, an essential tool in software development and collaborative project management. Its main function is to track and manage the different versions of files and source code over time.

- Code quality control, designed to analyze and evaluate the source code of a software program in order to identify problems, apply good programming practices and improve the overall quality of the code.

- Security monitoring, solutions designed to protect computer systems, networks, applications and data against threats and vulnerabilities. These tools address a wide range of cyber risks, from malware and hacker attacks to regulatory compliance issues.

- CI/CD engine, a tool or platform that automates and facilitates the process of integration, testing and code delivery in software development.

- Artifact repository, a centralized repository where software components generated during the development, compilation and build process of a project are stored and managed.

Maintaining each component of the chain has a cost that is not always accounted for, because it is usually absorbed into other activities. Excluding licensing (whether the tools are open source, or enterprise tools whose license fees are not included here), this maintenance comes to approximately $37,000.

Compared with the $30,000 cost of a DevOps platform, this figure is not as far off as one might think; in fact, it is higher. The cost is there even when it is not visible, so it is advisable to run these calculations before deciding which option is more beneficial.
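The comparison can be reproduced with a few lines of arithmetic. A minimal Python sketch, assuming that the $37,000 toolchain maintenance figure is annual, that it excludes licensing, and that the platform is consumed in SaaS mode (so no self-managed maintenance is added); the function names are illustrative.

```python
def annual_platform_cost(users: int, price_per_user_month: float) -> float:
    """Yearly subscription cost of a SaaS DevOps platform."""
    return users * price_per_user_month * 12


def compare(platform_cost: float, toolchain_maintenance: float) -> str:
    """Say which option is cheaper, assuming both figures are annual."""
    diff = toolchain_maintenance - platform_cost
    if diff == 0:
        return "costs are equal"
    cheaper = "platform" if diff > 0 else "toolchain"
    return f"{cheaper} is cheaper by ${abs(diff):,.0f} per year"


platform = annual_platform_cost(users=100, price_per_user_month=25)
print(compare(platform, toolchain_maintenance=37_000))
# platform is cheaper by $7,000 per year
```

Plugging in a company's own headcount, subscription price and estimated maintenance effort gives a first approximation; licensing fees or self-managed hosting would have to be added to the relevant side.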

Where is the market heading?

At this point, several questions arise: which option will consumers prefer? Will there be a shift in mindset towards the use of DevOps platforms?

The use of DevOps platforms is expected to increase exponentially in the coming years. This will be driven by the growth of the industry, as organizations need to modernize their applications, which requires a fundamental change in the underlying tools. DevOps platforms will be in increasing demand as organizations adopt agile and DevOps practices in order to deliver diverse applications, including cloud-native, mobile, web, edge and IoT apps.

According to a Gartner estimate, by 2027, 75% of organizations will have shifted from multiple solutions to DevOps platforms in order to streamline application delivery, up from 25% in 2023.

Gartner also uses its Magic Quadrant to identify the main players and evaluate their strengths and weaknesses, indicating which solutions are the benchmarks in the sector and which may become so in the future.

Author: Hugo Mendiguchia Gutierrez